Background: Billing transparency leads to better patient retention and outcomes

According to a survey published in the Journal of Patient Experience, nine out of ten patients surveyed wanted to know their payment responsibilities for services up front, yet only two out of ten knew what they would be charged, even immediately after an appointment. Similarly, 87% of patients reported being surprised by a medical bill they received. The intentionally obfuscated nature of medical billing practices erodes trust in the system and creates anxiety around medical procedures and services in general. In another survey of patients (n=1000), 64% indicated that they had delayed or forgone medical care due to billing concerns. This creates a destructive cycle: delayed care leads to complications that require costly interventions and strain the system overall.

Our goal with this AI-assisted chatbot is to improve billing clarity and support healthcare literacy so that patients seek care sooner and outcomes improve.

Building an MVP

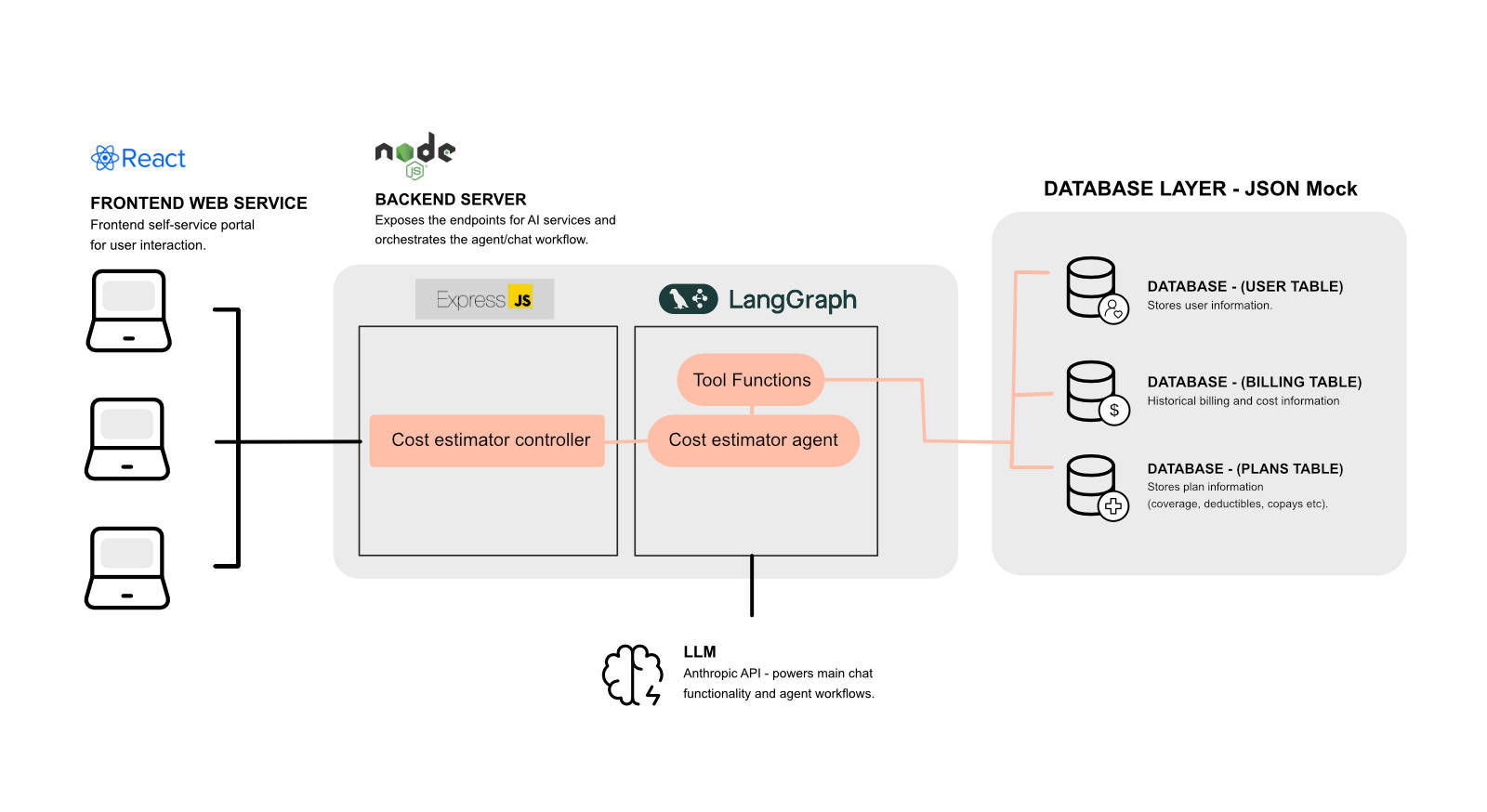

I used LangGraph to create a functional MVP for an agentic, retrieval-augmented AI system that could theoretically be integrated into billing portals and provide assistance with cost-estimation and billing interpretation.

The system is powered by Node.js with a React frontend and mock databases.

The interface is chat-based, but provides additional structured interactions to lower the cognitive load on users and guide them to the information they are searching for.

Results

Our unmoderated testing showed promising results, with 16 of 17 participants reporting they would be more likely to seek care and 15 of 17 feeling they had better control over their healthcare decisions.

Process and solution deep-dive

Our process ran in two primary stages. First, we built a lo-fi Figma prototype and ran it through moderated testing to gain deeper insight into what potential users would look for and how they responded to the idea of the AI assistant in general. Next, I developed a functional MVP using Node.js, React, and LangGraph based on what we learned. A wider round of unmoderated testing then validated, at scale, the areas of interest from the moderated round.

Development: LangGraph powered agentic RAG

I chose LangGraph to build the MVP because of its flexibility. Ultimately, an agent-based workflow with a tools node turned out to be the best fit for what we needed, but LangGraph supports much more complex architectures as well.

In our case, I gave the agent two tools: one for looking up procedures and another for obtaining pricing estimates. A third tool could be broken out for retrieving a user's health plan, but in the MVP this is rolled into the pricing tool.
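To make the two-tool setup concrete, here is a minimal sketch in plain TypeScript. It does not use LangGraph's API and is not the MVP's actual code: the mock data, field names, tool names (`lookup_procedures`, `estimate_price`), and the deductible-then-coinsurance math are all assumptions for illustration. It only shows the shape of the idea, with the health plan lookup folded into the pricing tool.

```typescript
// Hypothetical mock data standing in for the MVP's mock databases.
interface Procedure { code: string; name: string; basePrice: number; }
// coinsurance = the patient's share of costs after the deductible (assumed model).
interface Plan { deductibleRemaining: number; coinsurance: number; }

const PROCEDURES: Procedure[] = [
  { code: "MRI-KNEE", name: "MRI of the knee", basePrice: 1200 },
  { code: "XRAY-CHEST", name: "Chest X-ray", basePrice: 150 },
];

const PLANS: Record<string, Plan> = {
  "user-1": { deductibleRemaining: 500, coinsurance: 0.2 },
};

// Tool 1: find procedures whose name contains the query text.
function lookupProcedures(query: string): Procedure[] {
  const q = query.toLowerCase();
  return PROCEDURES.filter((p) => p.name.toLowerCase().includes(q));
}

interface Estimate {
  procedure: string;
  total: number;
  deductiblePortion: number;
  coinsurancePortion: number;
  patientOwes: number;
}

// Tool 2: pricing estimate. The plan lookup is rolled in here rather than
// being a separate tool, mirroring the MVP's choice.
function estimatePrice(userId: string, code: string): Estimate {
  const procedure = PROCEDURES.find((p) => p.code === code);
  if (!procedure) throw new Error(`Unknown procedure code: ${code}`);
  const plan = PLANS[userId];
  if (!plan) throw new Error(`No plan on file for user: ${userId}`);

  // Patient pays the remaining deductible first, then a share of the rest.
  const deductiblePortion = Math.min(plan.deductibleRemaining, procedure.basePrice);
  const coinsurancePortion = (procedure.basePrice - deductiblePortion) * plan.coinsurance;
  return {
    procedure: procedure.name,
    total: procedure.basePrice,
    deductiblePortion,
    coinsurancePortion,
    patientOwes: deductiblePortion + coinsurancePortion,
  };
}

// The agent emits a tool call; a tools node dispatches it to the right function.
type ToolCall =
  | { name: "lookup_procedures"; args: { query: string } }
  | { name: "estimate_price"; args: { userId: string; code: string } };

function runTool(call: ToolCall): Procedure[] | Estimate {
  switch (call.name) {
    case "lookup_procedures":
      return lookupProcedures(call.args.query);
    case "estimate_price":
      return estimatePrice(call.args.userId, call.args.code);
  }
}
```

Returning the deductible and coinsurance portions separately, rather than a single number, leaves room for the interface to show how an estimate was built up.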

The video below shows the LangGraph agent in action, calling tools to respond to user input:

Research: Early moderated testing for deeper insights

Moderated sessions showed strong validation of our tool’s usefulness, yet highlighted areas to improve:

Both users completed tasks without help, confirming basic usability, but we saw friction around needing to "click to reveal" estimates and confusion about deductible terms.

Users liked the definitions provided but wanted more support, so we added common follow-up questions and made deductible explanations easier to access.

Trust remained a key theme — users wanted transparency into the math behind estimates — and both said the tool could ease anxiety around costs and encourage future care-seeking.

Research: Unmoderated testing for validation at scale

In the unmoderated round, we saw meaningful gains in confidence and control. After using the prototype:

Average confidence in predicting procedure costs rose from 6.79 to 7.53 (scale of 10)

Confidence in understanding medical bills climbed from 7.68 to 7.88 (scale of 10)

Sixteen of seventeen users said the tool would make them more likely to seek care

Fifteen of seventeen users felt more in control of healthcare decisions
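For readers who want the figures above as deltas and percentages, the arithmetic can be sketched as follows. The numbers are copied from the results listed here; the variable names and the rounding helper are only illustrative.

```typescript
// Figures copied from the unmoderated results; names are illustrative.
const confidence = [
  { measure: "Predicting procedure costs", before: 6.79, after: 7.53 },
  { measure: "Understanding medical bills", before: 7.68, after: 7.88 },
];

const agreement = [
  { statement: "More likely to seek care", yes: 16, total: 17 },
  { statement: "More in control of healthcare decisions", yes: 15, total: 17 },
];

// Round to a fixed number of decimal places to hide floating-point noise.
function roundTo(value: number, places: number): number {
  const factor = Math.pow(10, places);
  return Math.round(value * factor) / factor;
}

// Gain in average score on the 10-point scale.
const gains = confidence.map((c) => ({
  measure: c.measure,
  gain: roundTo(c.after - c.before, 2),
}));

// Share of the 17 participants who agreed, as a percentage.
const shares = agreement.map((a) => ({
  statement: a.statement,
  percent: roundTo((a.yes / a.total) * 100, 1),
}));

console.log(gains);  // gains of 0.74 and 0.2 points
console.log(shares); // 94.1% and 88.2%
```

With a sample of seventeen, these percentages are best read as directional signals rather than precise estimates.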

On the topic of trust…

When we asked if a simple disclaimer — “This estimate is based on 50 similar procedures in your area” — would help, twelve said yes.

Still, a small group remained uneasy about relying on an AI-driven chatbot, reflecting a broader hesitancy to trust automated systems in healthcare. This tells us that when you pair AI with high-stakes health decisions, transparency around data sources, clear explanations of methodology, and familiar human anchors are non-negotiable.
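One way to surface the data behind an estimate is to return the sample size and price range alongside the number itself. The sketch below is an assumption about how that could look, not how the MVP computes estimates: the function name `summarizePrices`, the use of a median, and the sample prices are all hypothetical. Only the disclaimer wording comes from the test prompt above.

```typescript
interface EstimateSummary {
  sampleSize: number;
  low: number;
  median: number;
  high: number;
  disclaimer: string;
}

// Summarize comparable procedure prices so the UI can show where an
// estimate comes from (hypothetical helper, not part of the MVP).
function summarizePrices(prices: number[]): EstimateSummary {
  if (prices.length === 0) {
    throw new Error("Need at least one comparable price");
  }
  // Copy before sorting so the caller's array is not mutated.
  const sorted = [...prices].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median =
    sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;

  const n = sorted.length;
  return {
    sampleSize: n,
    low: sorted[0],
    median,
    high: sorted[n - 1],
    disclaimer: `This estimate is based on ${n} similar procedure${n === 1 ? "" : "s"} in your area`,
  };
}

// Usage with made-up prices:
const summary = summarizePrices([900, 1200, 1500, 1100]);
console.log(summary.median, summary.disclaimer);
```

Showing the low and high values next to the median gives users a sense of how much prices vary, which speaks to the request for transparency into the math behind estimates.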

These results demonstrate clear proof of concept: users not only completed tasks successfully in an unmoderated environment, but also reported measurable boosts in confidence and intent to act on care. At the same time, a handful still wanted deeper explanations, and a few remained hesitant about chatbots in healthcare, pointing to opportunities for richer transparency layers and alternative non-chat interfaces in future iterations.

Outcomes: Learning and future directions

The results of this project are promising for AI's potential to help patients feel more in control of their healthcare. Two other avenues that were not explored as thoroughly as the cost-estimation functionality were a bill interpreter assistant and a healthcare comparison tool. I would like to explore both possibilities further, using a similar approach and architecture to create the interface and assistant.

Research across all three tools could help reveal more ways to help connect patients with their care and match expectations to outcomes on the financial side.